Stable Diffusion and DALL-E AI Image Generators in UberCreate

AI image generation is the process of creating realistic images from text, sketches, or other inputs using artificial intelligence (AI) models. AI image generators have many applications, from digital art to marketing, and can enhance storytelling, web design, and multimedia projects. However, not all AI image generators are created equal.

In this article, we will explore two of the most advanced and innovative AI image generation models: Stable Diffusion and DALL-E. We will compare their capabilities, features, and technologies, and show how they can unleash your creativity with the power of prompt engineering.

We will also discuss the open source landscape, the ethical considerations, and the future trends in AI image generation technology.

Both Stable Diffusion and DALL-E stand at the forefront of a digital renaissance, transforming how we conceive and create art. These generative AI models have democratized artistic expression, enabling anyone with a computer to generate stunning visuals from simple text prompts. This shift not only expands the toolkit of digital artists but also invites individuals from all walks of life to explore their creativity without the need for traditional artistic skills.

Finally, we will explore UberCreate, an all-in-one AI tool that brings DALL-E and Stable Diffusion together, and how it benefits you.

What Sets Stable Diffusion and DALL-E Apart in AI Image Generation?

Stable Diffusion and DALL-E are two of the most recent and impressive AI image generation models, released by Stability AI and OpenAI respectively. Both models use a text-to-image approach, which means they can generate images from natural language descriptions. However, they differ in their architectures, methods, and results.

- Stable Diffusion 3 is a diffusion model, which means it generates images by gradually refining them from random noise. It uses a diffusion transformer that is trained to reverse the process of adding noise to an image; at generation time, it starts from pure noise and removes it step by step, guided by the text prompt. Stable Diffusion 3 generates high-quality images at around one megapixel (for example, 1024×1024 pixels) and can handle complex and diverse prompts. Stable Diffusion models can also perform inpainting, which means they can fill in missing or masked parts of an image given an input image and a text prompt.

- DALL-E 3 is OpenAI’s third-generation text-to-image model. The original DALL-E (2021) was an autoregressive transformer based on GPT-3 that generated images as sequences of tokens, while DALL-E 2 and DALL-E 3 moved to diffusion-based image generation. DALL-E 3 was trained on highly descriptive image captions and is built into ChatGPT, which helps it follow long, detailed prompts. Through OpenAI’s API it generates images at 1024×1024, 1792×1024, or 1024×1792 pixels. Like the original DALL-E, it performs zero-shot image generation, which means it can generate images for prompts and concept combinations it never saw during training.
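To make the idea of "refining an image from random noise" concrete, the toy sketch below implements only the forward (noising) half of a diffusion process, the part a model such as Stable Diffusion learns to undo. It assumes the linear noise schedule from the original DDPM paper (per-step noise variance rising from 0.0001 to 0.02 over 1,000 steps) and uses four numbers as a stand-in for an image. Stable Diffusion 3 itself uses a different (rectified-flow) training formulation on latent tensors, so this illustrates the principle rather than any product’s implementation.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances beta_t rising linearly from beta_start to beta_end."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t = product of (1 - beta_s) for s <= t: how much original signal survives."""
    alpha_bars = []
    running = 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0, alpha_bar, rng):
    """Jump straight to step t: x_t = sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * eps."""
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * e for x, e in zip(x0, eps)]
    return xt, eps


if __name__ == "__main__":
    rng = random.Random(0)
    x0 = [0.8, -0.3, 0.5, 0.1]  # toy "image": four pixel values
    alpha_bars = cumulative_alphas(linear_beta_schedule(1000))
    for t in (0, 250, 500, 999):
        xt, _ = add_noise(x0, alpha_bars[t], rng)
        print(f"t={t:4d}  signal weight={math.sqrt(alpha_bars[t]):.3f}  x_t={[round(v, 2) for v in xt]}")
```

By the last step almost none of the original signal remains, which is why generation can start from pure noise. A trained network is given x_t and t and predicts the noise eps; removing a portion of that predicted noise at each step walks the sample back toward a clean image, which is the reverse process described above.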

Comparing the Image Generation Model Capabilities of Stable Diffusion vs DALL-E

Stable Diffusion and DALL-E share the goal of converting text to images but differ significantly in their approach and output. DALL-E, developed by OpenAI, excels at following prompts closely, producing images that match the input text with a high degree of accuracy and, often, photorealism. Stable Diffusion, backed by Stability AI, offers a broader range of artistic styles and lets users refine images through inpainting and outpainting, making it a more flexible tool for creative exploration.

Exploring the Unique Features of Each Generation Model

DALL-E’s integration with ChatGPT enhances its prompt-following capabilities, making it exceptionally user-friendly and often able to produce the desired image on the first attempt. Conversely, Stable Diffusion’s open-source nature and add-ons such as ControlNet, which guides generation with extra inputs like edge maps or poses, allow for deeper customization and experimentation, catering to both novice users and experienced artists.

Stability AI vs OpenAI: The Technology Behind the Magic

At the heart of these models lie advanced machine learning techniques, with both using variations of the transformer architecture to process textual descriptions and generate complex images from them. The key difference lies in their accessibility and intended use: OpenAI’s DALL-E is part of a broader suite of AI tools available through a ChatGPT subscription or a paid API, while Stability AI emphasizes community involvement and innovation by openly releasing its model weights.
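The transformer machinery both model families rely on is attention. In Stable Diffusion 1.x and 2.x, the prompt reaches the image through cross-attention layers: each image position produces a query, and each text token (from the CLIP text encoder) supplies a key and a value, so every part of the image pulls in the words most relevant to it. The sketch below is a minimal, dependency-free illustration of scaled dot-product cross-attention with hand-picked toy vectors; it omits the learned projection matrices and multiple heads of a real model, and Stable Diffusion 3 instead mixes text and image tokens in a joint attention step.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Scaled dot-product attention.

    queries: one vector per image position (what each part of the image is "asking for").
    keys/values: one vector per text token (what the prompt offers).
    Returns one weighted mix of the value vectors per image position.
    """
    d = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        mixed = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(mixed)
    return outputs


# Toy example: two image positions attending over two text tokens ("cat", "hat").
text_keys = [[1.0, 0.0], [0.0, 1.0]]
text_values = [[1.0, 0.0], [0.0, 1.0]]  # one-hot, so each output row shows the attention weights
image_queries = [[2.0, 0.0], [0.0, 2.0]]  # position 0 aligns with token 0, position 1 with token 1
for row in cross_attention(image_queries, text_keys, text_values):
    print([round(x, 3) for x in row])
```

Each output row is a probability-weighted blend of the text values, so image position 0 is dominated by the first token and position 1 by the second.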

What Are the Unique Features of Stable Diffusion and DALL-E That Set Them Apart in AI Image Generation?

While Stable Diffusion excels at giving users control over image modification at a low cost, DALL-E stands out for its user-friendly interface, consistent image quality, and natural language processing capabilities. The choice between these AI image generators ultimately depends on specific user preferences, goals, and the level of customization required for image generation tasks.

Stable Diffusion:

- Inpainting Capabilities: Allows users to regenerate or replace specific elements of a generated image, for example resizing or swapping an object, offering greater control over image modification.

- Offline Accessibility: Unlike DALL-E and most other hosted AI image generators, Stable Diffusion can be downloaded and run offline on your own hardware, providing flexibility in usage.

- Cost-Effective: The model can be run locally without per-image fees, and hosted plans start at $9 per month, making it a more affordable option compared to DALL-E.

- Versatility and Power: Known for its ability to create high-quality images with a focus on user control and customization.

DALL-E:

- User-Friendly Interface: Requires a slightly lower level of technical expertise compared to Stable Diffusion, enhancing accessibility for users.

- Consistent Image Quality: Known for consistently generating high-quality and relevant images, ensuring reliable output.

- Natural Language Processing Capabilities: Enables users to interact conversationally with the model for refining prompts and generating images based on text inputs.
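In ChatGPT, the conversational refinement described above happens in the chat itself. Through OpenAI’s API, a similar loop is possible because DALL-E 3 automatically rewrites prompts and returns the rewritten text as `revised_prompt`, which you can read, edit, and send back. The sketch below assumes the official `openai` Python package (v1 or later) and an `OPENAI_API_KEY` environment variable; the accepted sizes are the DALL-E 3 values at the time of writing and may change, so check OpenAI’s current documentation.

```python
DALLE3_SIZES = {"1024x1024", "1792x1024", "1024x1792"}


def validate_size(size):
    """Reject sizes DALL-E 3 does not accept (per OpenAI's docs at the time of writing)."""
    if size not in DALLE3_SIZES:
        raise ValueError(f"unsupported DALL-E 3 size {size!r}; choose one of {sorted(DALLE3_SIZES)}")
    return size


def generate_with_dalle3(prompt, size="1024x1024"):
    """Return (image_url, revised_prompt) for one DALL-E 3 image.

    Needs `pip install openai` and an OPENAI_API_KEY environment variable.
    """
    from openai import OpenAI  # imported here so this file loads even without the SDK

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.images.generate(
        model="dall-e-3",
        prompt=prompt,
        size=validate_size(size),
        n=1,  # DALL-E 3 returns one image per request
    )
    image = response.data[0]
    # DALL-E 3 rewrites prompts before drawing; revised_prompt shows the text it actually used.
    return image.url, image.revised_prompt
```

For example, `url, used_prompt = generate_with_dalle3("a cozy reading nook with warm lamplight")` returns the image URL together with the prompt DALL-E 3 actually used, which is a useful starting point for the next round of edits.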

Limitations of Stable Diffusion and DALL-E in AI Image Generation

While both models offer advanced capabilities in AI image generation, these limitations highlight areas where improvements or considerations are necessary to enhance user experience and output quality. Users should carefully assess these constraints based on their specific needs and preferences when selecting an AI image generator for their projects.

Stable Diffusion:

- Inconsistency in Image Quality: Stable Diffusion can sometimes be hit-or-miss in terms of image quality, leading to variability in the output.

- Technical Expertise Requirement: Getting the best results, especially when running Stable Diffusion locally, requires some technical expertise (installing the software, choosing models, and tuning settings such as sampling steps and guidance scale), which can be a barrier for beginners.

- Resolution Constraints: Output quality and accuracy can deteriorate when the requested size departs far from the resolution the model was trained at (for example, 512×512 for Stable Diffusion 1.x), producing artifacts such as duplicated subjects or distorted compositions.

DALL-E:

- Limited Customization: DALL-E may have limitations in terms of customization compared to Stable Diffusion, potentially restricting users’ ability to tailor images to specific requirements.

- Ethical Considerations: Despite its consistent image quality, DALL-E faces challenges related to potential biases in generated images and the need for responsible usage to align with ethical practices.

- Complex Prompt Engineering: Although DALL-E handles broad prompts well, conveying an exact composition, layout, or detail through text alone can take several attempts, since it offers fewer non-text controls (such as masks or pose guides) than Stable Diffusion.
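The resolution constraint noted for Stable Diffusion above can be checked before generating. The helper below is a hypothetical sketch: it enforces that width and height are multiples of 8 (Stable Diffusion’s VAE downsamples each side by a factor of 8, and pipelines such as Hugging Face diffusers reject other sizes) and warns when a side strays far from an assumed native resolution. The native sizes in the table and the 50% tolerance are rule-of-thumb assumptions, not official limits.

```python
# Assumed native (training) resolutions; check the model card of the checkpoint you actually use.
NATIVE_SIDE = {"sd-1.x": 512, "sd-2.x-768": 768, "sdxl": 1024}


def check_resolution(width, height, model="sd-1.x", tolerance=0.5):
    """Return a list of warnings for a requested Stable Diffusion output size.

    Raises ValueError if a side is not a multiple of 8, because the VAE
    downsamples each side by a factor of 8.
    """
    if width % 8 or height % 8:
        raise ValueError("width and height must be multiples of 8")
    native = NATIVE_SIDE[model]
    warnings = []
    for name, side in (("width", width), ("height", height)):
        ratio = side / native
        if abs(ratio - 1.0) > tolerance:  # heuristic threshold, not an official limit
            warnings.append(
                f"{name}={side}px is {ratio:.2f}x the native {native}px; "
                "expect duplicated subjects or distorted composition"
            )
    return warnings
```

For example, `check_resolution(1024, 512)` returns one warning for the width, while `check_resolution(1024, 1024, model="sdxl")` returns none.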

Differences in the Types of Images Stable Diffusion and DALL-E Can Generate

While Stable Diffusion shines at rendering various styles, supports inpainting and outpainting, and offers extensive customization options, DALL-E stands out for its ease of use, its tendency toward abstract and painting-like images, and its consistently high-quality output. The choice between these models depends on the user’s specific requirements, preferences, and the type of images they aim to generate.

Stable Diffusion:

- Rendering Styles: Stable Diffusion renders a wide variety of styles and is often better than DALL-E at realistic photos out of the box.

- Inpainting and Outpainting: Stable Diffusion supports both inpainting (regenerating a part of the image while keeping the rest unchanged) and outpainting (extending the image while preserving the original content), giving users more control over image modification.

- Versatility: Users can keep refining an image (adjusting the prompt, seed, settings, and masked regions) until it meets their standards, making Stable Diffusion well suited to artistic creation and customization.

DALL-E:

- Abstract and Painting-like Images: DALL-E tends to produce more abstract or painting-like images and responds effectively to less detailed or broader prompts.

- Ease of Use: Known for its user-friendly interface and natural language processing capabilities, DALL-E offers a seamless experience for generating images based on simple and natural prompts.

- Consistent Image Quality: DALL-E is recognized for consistently generating high-quality and relevant images, ensuring reliable output for users.
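Inpainting and outpainting, listed above as Stable Diffusion features, both come down to a mask that tells the model which pixels to regenerate (1) and which to keep (0); outpainting simply enlarges the canvas and masks the new border. The dependency-free sketch below shows that bookkeeping on tiny grayscale grids, with a placeholder standing in for real model output. Real pipelines (for example the inpainting pipelines in Hugging Face diffusers, which take an image plus a mask image in which white marks the area to repaint) condition the model on the unmasked pixels; pasting the original pixels back, as `composite` does, is one common final step to guarantee untouched areas stay identical.

```python
def rect_mask(width, height, box):
    """Mask with 1 inside box=(x0, y0, x1, y1) (regenerate) and 0 elsewhere (keep)."""
    x0, y0, x1, y1 = box
    return [[1 if x0 <= x < x1 and y0 <= y < y1 else 0 for x in range(width)]
            for y in range(height)]


def outpaint_canvas(image, pad, fill=0):
    """Place image in the middle of a larger canvas; the new border is masked for generation."""
    h, w = len(image), len(image[0])
    canvas = [[fill] * (w + 2 * pad) for _ in range(h + 2 * pad)]
    mask = [[1] * (w + 2 * pad) for _ in range(h + 2 * pad)]
    for y in range(h):
        for x in range(w):
            canvas[y + pad][x + pad] = image[y][x]
            mask[y + pad][x + pad] = 0  # original content is preserved
    return canvas, mask


def composite(original, generated, mask):
    """Keep original pixels where mask == 0; take generated pixels where mask == 1."""
    return [[g if m else o for o, g, m in zip(o_row, g_row, m_row)]
            for o_row, g_row, m_row in zip(original, generated, mask)]


photo = [[10, 20, 30], [40, 50, 60]]
mask = rect_mask(3, 2, (1, 0, 2, 2))  # repaint the middle column
fake_model_output = [[99] * 3 for _ in range(2)]  # placeholder for what a model would generate
print(composite(photo, fake_model_output, mask))  # [[10, 99, 30], [40, 99, 60]]
```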

Strengths and Weaknesses of Stable Diffusion and DALL-E for Specific Use Cases

Stable Diffusion:

Strengths:

- Inpainting Capabilities: Allows users to adjust specific elements in images or replace them, providing control over modifications.

- Offline Accessibility: Can be downloaded and used offline, offering flexibility in usage.

- Versatility: Known for rendering various styles, especially realistic photos, and supporting inpainting and outpainting, enabling extensive customization.

Weaknesses:

- Inconsistency in Image Quality: May sometimes deliver variable image quality, impacting the reliability of output.

- Technical Expertise Requirement: Getting optimal results may require some technical expertise, such as installing the software and tuning its settings, which can be a barrier for beginners.

- Resolution Constraints: Challenges may arise when deviating from the intended resolution, affecting image quality.

DALL-E:

Strengths:

- User-Friendly Interface: Requires less technical expertise compared to Stable Diffusion, enhancing accessibility for users.

- Consistent Image Quality: Known for consistently generating high-quality and relevant images, ensuring reliable output.

- Natural Language Processing Capabilities: Excels in responding to less detailed or broader prompts effectively, making prompt engineering less critical.

Weaknesses:

- Limited Customization: May have limitations in customization compared to Stable Diffusion, potentially restricting users’ ability to tailor images to specific requirements.

- Ethical Considerations: Faces challenges related to potential biases in generated images and the need for responsible usage to align with ethical practices.

- Complex Prompt Engineering: Although DALL-E handles broad prompts well, conveying an exact composition or detail through text alone can take several attempts, since it offers fewer non-text controls than Stable Diffusion.

While Stable Diffusion offers extensive customization options and versatility in rendering styles, DALL-E stands out for its ease of use and consistently high-quality output. The choice between these models depends on the specific use case requirements, user expertise level, and desired level of customization for image generation tasks.

Specific Use Cases Where Stable Diffusion Outperforms DALL-E:

- Inpainting Capabilities: Stable Diffusion excels in inpainting, allowing users to adjust specific elements in images or replace them, providing greater control over image modification.

- Versatility in Rendering Styles: Stable Diffusion has an advantage in rendering various styles, especially realistic photos, outperforming DALL-E in this aspect.

- Offline Accessibility: Unlike DALL-E, Stable Diffusion can be downloaded and used offline, offering users flexibility and privacy in image generation tasks.

- Customization and Control: Stable Diffusion lets users keep refining an image, through prompts, settings, masks, and add-on models, until it meets their standards, making it better suited than DALL-E to artistic creation and detailed customization.

- Cost-Effectiveness: Stable Diffusion can be run locally without per-image fees, and hosted plans start at $9 per month, making it more affordable than DALL-E and accessible to a wider range of individuals.

In summary, Stable Diffusion outperforms DALL-E in specific use cases such as inpainting capabilities, versatility in rendering styles, offline accessibility, customization options, and cost-effectiveness. Users seeking greater control over image modification, diverse rendering styles, offline usage, detailed customization, and budget-friendly options may find Stable Diffusion more suitable for their image generation needs compared to DALL-E.

Some specific use cases where DALL-E outperforms Stable Diffusion include:

- User-friendliness: DALL-E requires less technical expertise compared to Stable Diffusion, making it more accessible to a broader audience.

- Consistent image quality: DALL-E is renowned for delivering high-quality and relevant images, ensuring reliable outcomes.

- Natural language processing capabilities: DALL-E excels in responding to less detailed or broader prompts effectively, making prompt engineering less critical.

- Speed: DALL-E runs on OpenAI’s servers, so it often returns images faster than Stable Diffusion running on typical consumer hardware; the exact gap depends on the GPU, image size, and number of sampling steps used.

However, it is important to note that Stable Diffusion offers advantages in specific use cases, such as inpainting capabilities, offline accessibility, customization options, and cost-effectiveness. Ultimately, the choice between DALL-E and Stable Diffusion depends on the specific use case requirements, user expertise level, and desired levels of customization and control for image generation tasks.

How to Harness the Power of Prompt Engineering for Stunning AI Art

Creating Effective Text Prompts for Desired Imagery

The art of prompt engineering involves crafting text inputs that guide the AI to produce specific visual outcomes. This requires a balance between specificity and creativity, ensuring the prompt is detailed enough to convey the desired concept while leaving room for the AI’s interpretative capabilities.

Understanding the Importance of Precise Language in Prompt Crafting

The choice of words in a prompt can significantly influence the generated image. Terms that describe not only the subject but also the style, mood, and context can lead to more accurate and visually appealing results.
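One way to apply this advice is to fill in each part of the prompt explicitly. The `PromptSpec` class below is a hypothetical helper, not part of any library: it simply joins subject, context, style, and mood into a single comma-separated prompt. The comma-separated format is a common convention for Stable Diffusion prompts rather than a requirement; DALL-E also handles full natural-language sentences well.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical helper: the parts of a prompt worth describing explicitly."""
    subject: str
    context: str = ""
    style: str = ""
    mood: str = ""
    extras: list = field(default_factory=list)

    def to_prompt(self):
        """Join the non-empty parts into one comma-separated prompt, subject first."""
        parts = [self.subject, self.context, self.style, self.mood, *self.extras]
        return ", ".join(p.strip() for p in parts if p.strip())


spec = PromptSpec(
    subject="a lighthouse on a rocky cliff",
    context="overlooking a stormy sea at dawn",
    style="watercolor painting",
    mood="calm, hopeful",
)
print(spec.to_prompt())
```

Keeping the parts separate makes it easy to change one dimension (say, the style) while holding the others fixed.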

Tips and Tricks for Optimizing Image Output Through Prompt Engineering

Experimentation is key: iteratively refining prompts based on previous outputs can help you home in on the perfect formulation. Additionally, leveraging the unique features of each model, such as DALL-E’s ability to generate variations or Stable Diffusion’s inpainting, can further enhance the creative process.
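Iteration is easier to learn from when it is systematic. The hypothetical helper below (plain Python, no image library) generates every combination of a few prompt options so they can be compared side by side. When running the variants through Stable Diffusion, keeping the random seed fixed (in Hugging Face diffusers, by passing `generator=torch.Generator("cuda").manual_seed(42)` to the pipeline) makes it more likely that differences between images come from the wording rather than from the starting noise.

```python
import itertools


def prompt_variants(base, **options):
    """Every combination of the option lists appended to the base prompt, in a stable order."""
    names = list(options)  # keyword order is preserved, so the output order is predictable
    return [", ".join([base, *combo]) for combo in itertools.product(*(options[n] for n in names))]


variants = prompt_variants(
    "a red fox in a snowy forest",
    style=["oil painting", "wildlife photograph"],
    lighting=["golden hour", "overcast"],
)
for v in variants:
    print(v)
```

Two styles and two lighting options yield four prompts; adding a third option list multiplies the count, so it pays to vary only a few dimensions per round.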

Navigating the Open Source Landscape: Stable Diffusion and its Impact

The Significance of Open-Source in Accelerating AI Image Generator Innovation

Stable Diffusion’s open-source model fosters a collaborative environment where developers and artists can contribute to its evolution, leading to rapid advancements and a diverse array of specialized models.

How to Get Started with Stable Diffusion's Open Source Model

Accessing Stable Diffusion is straightforward, with various guides and resources available to help users set up the model for personal or professional use. The community around Stable Diffusion also provides extensive support for newcomers.
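As one concrete starting point, the sketch below uses the Hugging Face `diffusers` library to run Stable Diffusion XL on a local machine. It assumes `pip install diffusers transformers accelerate torch`, an NVIDIA GPU with enough memory for SDXL in half precision, and the `stabilityai/stable-diffusion-xl-base-1.0` checkpoint as an example; any compatible checkpoint can be substituted. The first call downloads the weights into the local Hugging Face cache, after which generation no longer needs the internet (setting the `HF_HUB_OFFLINE=1` environment variable makes that explicit).

```python
def generate_locally(prompt, output_path="output.png", seed=42, steps=30, guidance=7.0):
    """Render one image with Stable Diffusion XL on a local CUDA GPU and save it as a PNG."""
    # Heavy dependencies are imported inside the function so this file loads without them.
    import torch
    from diffusers import AutoPipelineForText2Image

    pipe = AutoPipelineForText2Image.from_pretrained(
        "stabilityai/stable-diffusion-xl-base-1.0",  # example checkpoint; swap in any compatible one
        torch_dtype=torch.float16,  # half precision to reduce GPU memory use
        variant="fp16",
    ).to("cuda")
    generator = torch.Generator("cuda").manual_seed(seed)  # same seed + prompt: repeatable on one setup
    image = pipe(
        prompt,
        num_inference_steps=steps,  # more steps: slower, often slightly cleaner
        guidance_scale=guidance,  # higher: follows the prompt more literally
        generator=generator,
    ).images[0]
    image.save(output_path)
    return output_path
```

For example, `generate_locally("a cozy reading nook, warm lamplight, film photograph")` writes `output.png` to the current directory. Fixing `seed` makes runs repeatable, which is useful when comparing prompt changes.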

Community Contributions and Enhancements to Stable Diffusion

The vibrant community surrounding Stable Diffusion has led to the development of numerous plugins, models, and tools that extend its capabilities, allowing for more nuanced control over the image generation process and opening up new avenues for creativity.
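Many community contributions are distributed as LoRA files: small add-on weights that teach a base model a new style or subject without retraining it. The sketch below shows one way to apply such a file with Hugging Face `diffusers`. The folder and file name are hypothetical placeholders for a LoRA you have downloaded or trained, the LoRA must have been made for the same base model (here SDXL), and the code assumes `diffusers`, `torch`, and a CUDA-capable GPU.

```python
def generate_with_lora(prompt, lora_dir, weight_name, lora_scale=0.8, output_path="lora_output.png"):
    """Hypothetical helper: apply a community LoRA to SDXL and render one image.

    lora_dir / weight_name are placeholders, e.g. a folder containing a .safetensors file.
    """
    # Heavy dependencies are imported inside the function so this file loads without them.
    import torch
    from diffusers import AutoPipelineForText2Image

    pipe = AutoPipelineForText2Image.from_pretrained(
        "stabilityai/stable-diffusion-xl-base-1.0",
        torch_dtype=torch.float16,
        variant="fp16",
    ).to("cuda")
    pipe.load_lora_weights(lora_dir, weight_name=weight_name)  # LoRA must target this base model
    image = pipe(
        prompt,
        num_inference_steps=30,
        cross_attention_kwargs={"scale": lora_scale},  # 0 turns the LoRA off, 1 is full strength
    ).images[0]
    image.save(output_path)
    return output_path
```

Lowering `lora_scale` blends the add-on style more subtly with the base model, which is often how community LoRAs are tuned in practice.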

Exploring the Boundaries of Creativity: Use Cases for AI-Generated Images

AI-generated images have found applications across a wide range of fields, from digital art and marketing to web design and multimedia projects. The ability to quickly generate visuals from text prompts is revolutionizing content creation, enabling more dynamic storytelling and innovative design solutions.

Avoiding Common Pitfalls: Ethical Considerations in AI Image Generation

As AI art generators become more prevalent, concerns around copyright, creativity, and the potential for misuse rise. Both OpenAI and Stability AI have implemented safeguards to address these issues, but the ethical use of these tools remains a shared responsibility among users.

The Future of AI Art: Predictions and Trends in Image Generation Technology

The rapid advancement of AI image generators suggests a future where the collaboration between human creativity and AI tools becomes even more seamless. Anticipating further enhancements in prompt interpretation, image quality, and ethical safeguards, the boundary between AI-generated art and human-created art may continue to blur, heralding a new era of digital creativity.

In conclusion, Stable Diffusion and DALL-E represent two leading edges of the AI image generation frontier, each with its strengths and unique capabilities. As these technologies evolve, they promise to further democratize art creation, making it more accessible and versatile than ever before.

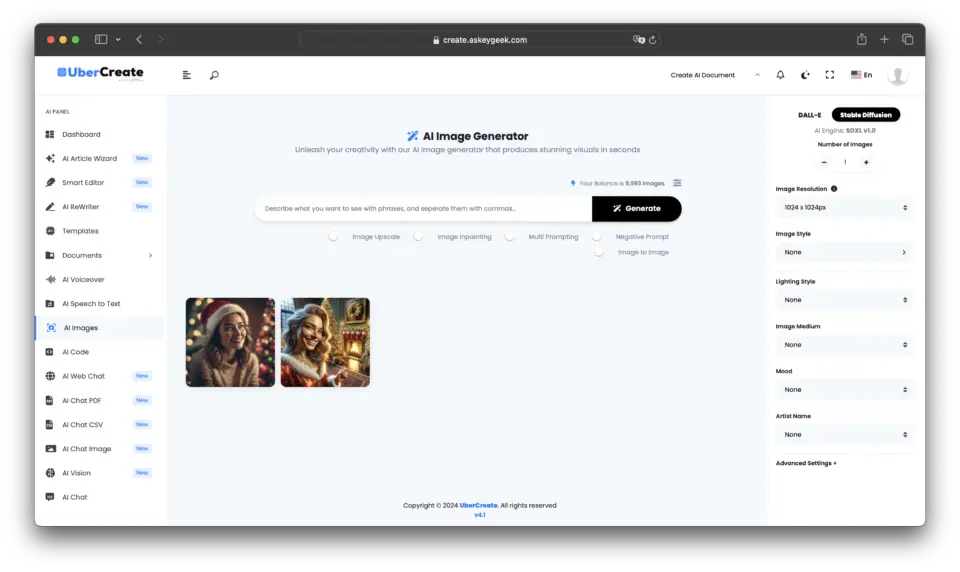

Integrating the Best of Both Worlds: UberCreate Merges Stable Diffusion and DALL-E

In the ever-evolving landscape of AI image generation, a new tool has emerged that harnesses the combined power of two of the most advanced AI models in the industry: UberCreate. This innovative platform brings together the capabilities of both Stable Diffusion and DALL-E, offering users an unparalleled experience in generating images from any description.

The Power of Combined AI Image Generators in UberCreate

UberCreate is a testament to the synergy that can be achieved when combining the strengths of different AI technologies. By integrating the precision of DALL-E with the flexibility of Stable Diffusion, UberCreate provides a comprehensive solution for anyone looking to generate images quickly and efficiently.

Main Features and Functionalities of UberCreate

UberCreate boasts an intuitive interface that simplifies the process of creating visuals, making it accessible to users regardless of their technical expertise. With the ability to generate images from any description, the tool caters to a wide array of creative needs, from professional design work to personal art projects.

One of the standout features of UberCreate is its AI Vision Expert, which allows users to upload an image and receive a detailed breakdown of its content. This feature can identify objects, people, and places, as well as discern intricate details, providing valuable insights that can be used to refine the creative process.

The Benefits of Having Both Stable Diffusion and DALL-E in a Single Tool

Having both Stable Diffusion and DALL-E within UberCreate means that users can enjoy the best of both worlds. Whether they require the nuanced artistic styles that Stable Diffusion offers or the high-fidelity and prompt adherence of DALL-E, UberCreate can accommodate these needs seamlessly. This dual capability ensures that the images produced are not only visually stunning but also closely aligned with the user’s vision.

Moreover, the combination of these models in one tool significantly reduces the time and effort required to create visuals. Users can generate images in minutes, which would traditionally take hours to craft by hand or by using separate tools. This efficiency is invaluable for professionals who need to meet tight deadlines without compromising on quality.

Conclusion

UberCreate represents a significant leap forward in AI image generation, providing a versatile and powerful tool that leverages the combined strengths of Stable Diffusion and DALL-E.

Its user-friendly interface and advanced features make it an essential asset for artists, designers, and creatives who seek to push the boundaries of digital art and content creation.

With UberCreate, the possibilities are as limitless as the imagination, enabling users to bring their most ambitious visual concepts to life with ease and precision.

Frequently Asked Questions (FAQs)

What are the key differences between DALL-E and Stable Diffusion in the UberCreate tool?

The key differences between DALL-E and Stable Diffusion within UberCreate lie in how each model turns text into images. DALL-E excels at generating photorealistic images from natural language descriptions; it was trained on highly descriptive captions, which makes it strong at precise, caption-based image creation. In contrast, Stable Diffusion is a latent diffusion model conditioned on CLIP text embeddings, and its adjustable diffusion settings give users more freedom to create abstract or stylized images directly from text prompts.

How does the integration of DALL-E and Stable Diffusion improve AI-generated art in UberCreate?

By integrating both DALL-E and Stable Diffusion, UberCreate offers a versatile platform for AI-generated art. This combination allows users to leverage DALL-E’s capacity for generating photorealistic images based on detailed natural language descriptions and Stable Diffusion’s ability to produce unique, stylized art through its latent diffusion process. This ensures that no matter the complexity of the images you want to generate, UberCreate provides a comprehensive solution.

Can UberCreate's combined DALL-E and Stable Diffusion platform understand complex natural language inputs for image generation?

Yes, the combined platform of DALL-E and Stable Diffusion in UberCreate is designed to understand complex natural language inputs. DALL-E’s advanced text-to-image model and Stable Diffusion’s CLIP-based text encoding allow the system to interpret intricate descriptions and convert them into corresponding images, facilitating a seamless caption-to-image translation.

What advancements does Stable Diffusion offer over DALL-E 2 in UberCreate?

Stable Diffusion offers several advantages over DALL-E 2 within UberCreate, including more flexible control over the image generation process (through settings such as the seed, number of sampling steps, guidance scale, and masks) and, for many prompts, higher image quality. It can also generate more abstract and stylistically diverse images than DALL-E 2. In addition, because Stable Diffusion runs its diffusion process in a compressed latent space rather than directly on pixels, inference is comparatively efficient, which keeps generation times short and makes the model practical to run on consumer GPUs.
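The efficiency of latent diffusion comes from where the denoising happens. Stable Diffusion’s VAE compresses each side of the image by a factor of 8 into a small latent tensor, and the denoising network works on that tensor instead of the full RGB image. The arithmetic below assumes the 8× downsampling and 4 latent channels used by Stable Diffusion 1.x, 2.x, and SDXL; the last line assumes the 16-channel latents introduced with Stable Diffusion 3.

```python
def latent_shape(width, height, downsample=8, latent_channels=4):
    """Shape (channels, height, width) of the latent tensor the diffusion model denoises."""
    if width % downsample or height % downsample:
        raise ValueError(f"width and height must be multiples of {downsample}")
    return (latent_channels, height // downsample, width // downsample)


def compression_ratio(width, height, downsample=8, latent_channels=4, pixel_channels=3):
    """How many times fewer numbers the latent holds than the RGB image."""
    c, h, w = latent_shape(width, height, downsample, latent_channels)
    return (pixel_channels * width * height) / (c * h * w)


print(latent_shape(512, 512))  # (4, 64, 64)
print(compression_ratio(512, 512))  # 48.0
print(compression_ratio(1024, 1024, latent_channels=16))  # 12.0
```

A 512×512 RGB image holds 786,432 values, while its latent holds 16,384, so each denoising step processes 48 times fewer values.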

How does one use AI to seamlessly blend DALL-E and Stable Diffusion generated images in UberCreate?

To blend DALL-E and Stable Diffusion generated images in UberCreate, users can employ the platform’s inpainting feature. After generating a base image with one model, you mask the region you want to change and describe the replacement in a text prompt; because the new content is generated in the context of the surrounding pixels, it merges naturally with the original image details. By specifying which aspects of the artwork should come from each model, users can combine the text-conditional image generation strengths of both in a single composition.

What is the significance of CLIP embeddings in combining DALL-E and Stable Diffusion?

CLIP (Contrastive Language-Image Pre-training) embeddings map text and images into a shared vector space, so a prompt and an image that match end up close together. Both model families build on this idea: DALL-E 2 generates images from CLIP image embeddings, and Stable Diffusion is conditioned on CLIP text embeddings. When blending images generated by both models in UberCreate, such embeddings help the AI interpret the content and style of each image at a granular level, so that elements from different images can be merged cohesively and accurately.
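The core operation behind comparing CLIP embeddings is cosine similarity. The sketch below ranks candidate images by how closely their embeddings point in the same direction as a prompt’s embedding, which is the idea behind using CLIP to pick the output that best matches a prompt. The vectors are made up for illustration; in practice they would come from a CLIP model’s text and image encoders. This is a generic illustration, not a description of UberCreate’s internals.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 = same direction, 0.0 = orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cannot compare a zero vector")
    return dot / (norm_a * norm_b)


def rank_by_prompt(text_embedding, image_embeddings):
    """Sort candidate images from best to worst match with the prompt embedding."""
    scored = [(name, cosine_similarity(text_embedding, emb)) for name, emb in image_embeddings.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Made-up 3-dimensional vectors; real CLIP embeddings have hundreds of dimensions.
prompt_vec = [0.9, 0.1, 0.0]
candidates = {
    "dalle_render": [0.8, 0.2, 0.1],
    "sd_render": [0.1, 0.9, 0.3],
}
for name, score in rank_by_prompt(prompt_vec, candidates):
    print(f"{name}: {score:.3f}")
```

Because cosine similarity ignores vector length, it compares what an image depicts rather than how strongly the encoder responded to it.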

Can UberCreate's DALL-E and Stable Diffusion generate images from the same natural language prompt differently?

Yes, when using the same natural language prompt, DALL-E and Stable Diffusion can generate distinctly different images due to their varying approaches to image generation. DALL-E focuses on creating precise, photorealistic images that closely match the given caption, leveraging its rich dataset and advanced text-to-image model. Conversely, Stable Diffusion might interpret the same prompt more abstractly or stylistically, taking advantage of its diffusion process and latent image generation techniques. This variance demonstrates the diverse creative potential of using AI within UberCreate.

How do DALL-E and Stable Diffusion manage to produce accurate and detailed images from vague prompts?

DALL-E and Stable Diffusion are designed to extract and use the most relevant information from even the vaguest of prompts, thanks to their advanced AI models and large training datasets. DALL-E employs a sophisticated text-to-image model that can infer details and context not explicitly mentioned in the prompt. Stable Diffusion, through its latent diffusion process and CLIP text embeddings, fills in the gaps by drawing on its extensive training on diverse image content. Together, they combine inference, creativity, and natural language understanding to generate detailed images that align with the user’s intent.